What is Routing Protocol?

Let us try to understand this concept

Suppose you want to go to your shop from home. You book a cab or take an auto to your shop. In the path of your journey, your auto driver encounters several signboards which help him/her to take the proper turn or best path, or in the case of a cab, Google Maps will help you in choosing the best route. In this likeness consider yourself as the Data the auto or cab as the Routed Protocol, and the signboards or the GPS installed in your driver’s phone as the Routing Protocol.

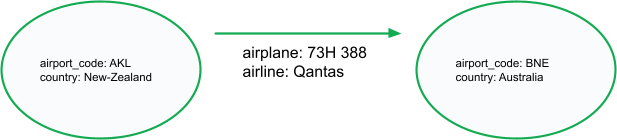

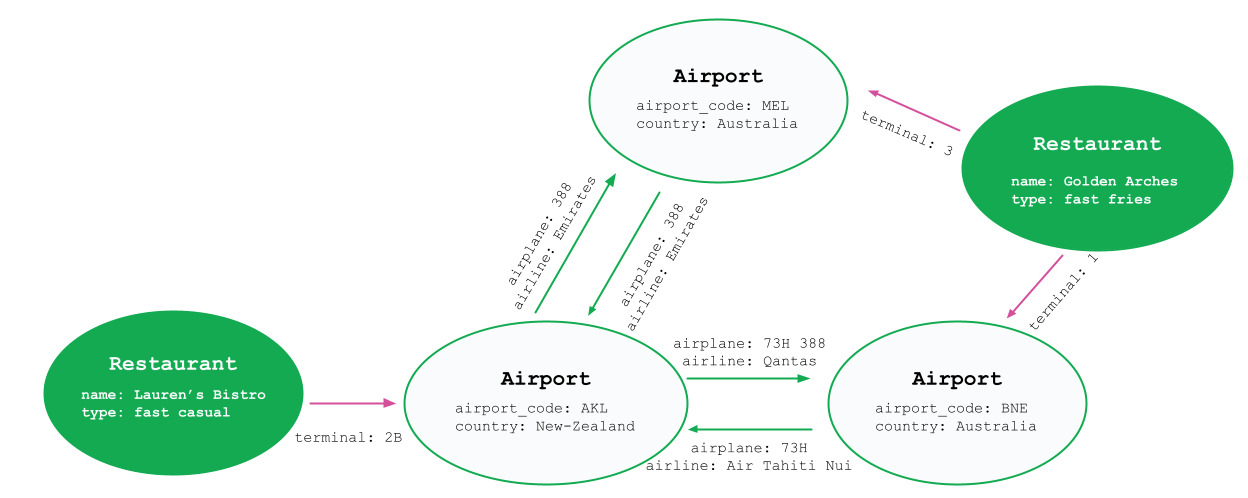

Similarly, It provides appropriate addressing information in its internet layer or network layer to allow a packet to be forwarded from one network to another.

The IP network is classified into two categories: Interior Gateway and Exterior Gateway Protocol

Interior gateway protocols are used inside an organization’s network and are limited to the border router. Exterior gateway protocols are used to connect the different Autonomous Systems (ASs). A simple definition that fits most of the time defines the border router as a router that has a foot in two worlds: one going to the Internet and another that is placed inside the organization and that’s why its name is, border router.

Purpose and Use Cases

With Dijkstra’s Algorithm, you can find the shortest path between nodes in a graph. Particularly, you can find the shortest path from a node (called the “source node”) to all other nodes in the graph, producing a shortest-path tree.

This algorithm is used in GPS devices to find the shortest path between the current location and the destination. It has broad applications in industry, especially in domains that require modeling networks.

What is OSPF(Open Shortest Path First)?

OSPF is a link-state protocol that uses a shorted path first algorithm to build and calculate the shortest path to all known destinations. The shortest path is calculated with the use of the Dijkstra algorithm. The algorithm by itself is quite complicated. This is a very high-level, simplified way of looking at the various steps of the algorithm.OSPF is not a CISCO proprietary protocol like EIGRP.OSPF always determines the loop-free routes. If any changes occur in the network it updates fast.

These are some of the important points related to OSPF.

- The OSPF is an open standard protocol that is most popularly used in modern networks.

- It is used to find the best path between the source and the destination router using its own Shortest Path First.

- Various protocols are used for the shortest path. But in real life mostly problems are undirected graph-like nature. OSPF using Dijkstra’s algorithm solved the shortest path problem in both types of problems i.e. directed and undirected graphs.

Shortest Path First Algorithm

OSPF uses a shorted path first algorithm to build and calculate the shortest path to all known destinations. The shortest path is calculated with the use of the Dijkstra algorithm. The algorithm by itself is quite complicated.

Routing protocols like OSPF calculate the shortest route to a destination through the network based on an algorithm.

Why there is a need for OSPF when there is RIP protocol?

The first routing protocol that was widely implemented, the Routing Information Protocol (RIP), calculated the shortest route based on hops, that is the number of routers that an IP Packet had to traverse to reach the destination host. RIP successfully implemented dynamic routing, where routing tables change if the network topology changes. But RIP did not adapt its routing according to changing network conditions, such as data transfer rate. Demand grew for a dynamic routing protocol that could calculate the fastest route to a destination. OSPF was developed so that the shortest path through a network was calculated based on the cost of the route,

OSPF is a routing protocol. Two routers speaking OSPF to each other exchange information about the routes they know about and the cost for them to get there.

When many OSPF routers are part of the same network, information about all of the routes in a network is learned by all of the OSPF routers within that network—technically called an area.

Each OSPF router passes along information about the routes and costs they’ve heard about to all of their adjacent OSPF routers, called neighbors.

OSPF routers rely on the cost to compute the shortest path through the network between themselves and a remote router or network destination. The shortest path computation is done using Dijkstra’s Algorithm This algorithm isn’t unique to OSPF. Rather, it’s a mathematical algorithm that happens to have an obvious application to networking.

The routers work on the third layer of our OSI model. OSPF is a routing protocol. When data move across multiple networks, IP routing helps to determine the path of data by setting some protocols to reach the source destination. RIP (Routing Information Protocol) and OSPF (Open Shortest Path First) Protocol are types of dynamic routing.

Comparing the difference between Static and Dynamic Routing :

– Static routing is for small networks like small scale organization that has predicted number of users and static or minimum bandwidth usage

– Dynamic Routing is used in the case of large networks because of its capabilities like it keeps changing and updating along with network topologies. Dynamic Routing Protocols dynamically discover network destinations.

OSPF maintains information in three tables:-

Topology Table contains the entire road map of the network with all available OSPF routers and calculated best and alternative paths.

Neighbor Table that contains information about neighboring routers so that information can be interchanged.

Routing Table where the current working best paths will store and it is used to forward the data traffic between neighbors.

How Dijkstra’s Algorithm Works With Example

Consider the map below. The cities have been selected and marked from alphabets A to F and every edge has a cost associated with it.

We need to travel from Bengaluru to all other places and we have to identify what are the shortest paths with minimal cost from Bengaluru to other destinations.

- Convert the problem to its graph equivalent.

Upon conversion, we get the below representation. Note that the graph is weighted and undirected. All the cities have been replaced by the alphabets associated with them and the edges have the cost value (to go from one node to another) displayed on them.

- Assign a cost to vertices.

Assign a cost of 0 to source vertex and ∞∞ (Infinity) to all other vertices as shown in the image below.

Maintain a list of unvisited vertices. Add all the vertices to the unvisted list.

- Calculate the minimum cost for neighbors of the selected source.

For each neighbor A, C, and D of source vertex selected (B), calculate the cost associated to reach them from B using the formula. Once this is done, mark the source vertex as visited (The vertex has been changed to blue to indicate visited).

Minimum(current cost of neighbor vertex, cost(B)+edge_value(neighbor,B))

- For neighbor A: cost = Minimum(∞∞ , 0+3) = 3

- For neighbor C: cost = Minimum(∞∞ , 0+1) = 1

- For neighbor D: cost = Minimum(∞∞ , 0+6) = 6

- Select the next vertex with the smallest cost from the unvisited list.

Choose the unvisited vertex with minimum cost (here, it would be C) and consider all its unvisited neighbors (A, E, and D), and calculate the minimum cost for them.

Once this is done, mark C as visited.

Minimum(current cost of neighbor vertex, cost(C)+edge_value(neighbor,C))

- For neighbor A: cost = Minimum(3 , 1+2) = 3

- For neighbor E: cost = Minimum(∞∞, 1+4) = 5

- For neighbor D: cost = Minimum(6 , 1+4) = 5

Observe that the cost value of node D is updated by the new minimum cost calculated.

- Repeat step 4 for all the remaining unvisited nodes.

Repeat step 4 until there are no unvisited nodes left. At the end of the execution, we will know the shortest paths from the source vertex B to all the other vertices. The final state of the graph would be like below.

Where OSPF is Used?

OSPF is an Interior Gateway Protocol (IGP). As the name suggests, these are used for internal network routing. This would typically be between switches and routers in the same location. Sometimes OSPF has also been used in Layer 2 connections between offices.

OSPF is the most widely used but it is not the only choice. With that said, it is the most standardized IGP and that allows for optimal vendor interoperability. OSPF is primarily used for internal routing because it’s a link-state routing protocol.

OSPF is a Link State protocol based on cost under a single routing solution that maintains information of all nodes on the network. Since each node holds the entire network structure information, each node can independently calculate the path to reach the destination by adopting the shortest path algorithm namely Dijkstra’s algorithm as discussed earlier.

The overhead of being a link-state protocol is minimized due to the smaller topology. Not to say they cannot get large but compared to the topology of internet routing, they are usually minimal.

The benefit to being a link-state protocol is its ability to highly engineer traffic routing due to its in-depth understanding of the topology. Each router has a full view of how each router is connected. The downside is that many convergence scenarios require a full or partial table recalculation.

Thanks For Reading this article…!!!!